In what may seem a slightly unusual move for a law firm that has very openly backed the use of legal AI technology, including making an investment in the UK’s Luminance, top M&A firm Slaughter and May has published a report about the potential downsides of using AI.

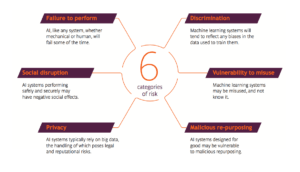

The report, made with ASI Data Science, gives a very thorough and in places quite imaginative account of all the ways that using AI (in general, not just for lawyers) could create a wide variety of problems.

The report can be found here. Artificial Lawyer is, perhaps predictably, not a huge fan of ‘AI = doom’ theories, but the Slaughter and May report is, as one would expect, very well done. It’s also a lot more balanced than the usual ‘naughty algorithms will ruin everything’ type of piece.

With that in mind, I have taken the liberty of presenting a few choice quotes here, including:

‘The costs of getting AI implementation wrong could be great – and this could include human, social and political costs as much as economic costs: organisations risk meaningful losses, fines and reputational damage if the use of AI results, for example, in unintended discrimination, misselling or breach of privacy.

It is thus increasingly critical for boards and leaders to consider carefully not only how adoption of technology such as AI can deliver efficiencies and cost savings, but also to consider carefully how the associated risks can be managed properly. In an AI context in particular, this is undoubtedly a challenge for managers who typically will not yet have the knowledge and tools available to evaluate the size and shape of the risks in an AI system, let alone to manage them effectively. The absence of best practices or industry standards also makes it hard to benchmark what the safe and responsible use of AI looks like in a business environment.

We are keen advocates of AI and we believe it has truly transformative potential in both the public and private sector. We would like to see organisations take a thoughtful and responsible approach to their implementation of AI systems. Unfortunately, while much airtime has been given to the potential benefits of AI technologies, there has yet to be significant attention devoted to the risks and potential vulnerabilities of AI.’

And,

‘We expect the ability of computers to continue to grow. Fundamentally, the human brain is a computer on a biological substrate, and so ultimately we should not expect there to be any tasks performed by humans that remain outside of the capability of computers. This looming breakthrough may be closer than intuition suggests.

The World Economic Forum reported in its Global Risks Report for 2017 that global investment in AI start-ups has risen astronomically from USD282 million in 2011 to just short of USD2.4 billion in 2015. Figures published recently by Bank of America Merrill Lynch suggest that the global market for AI-based systems will reach a value of USD153 billion by 2020; more money will be invested into AI research in the next decade than has been invested in the entire history of the field to this point.’

And all kinds of stuff can go wrong…

My favourite is the ‘malicious repurposing’, which is described as:

‘…one of the great features of some AI systems is their adaptability. Adaptability in terms of an AI system being able to adjust its reasoning and responses based on exposure to new data, but also the relative ease with which generic AI algorithms can be re-purposed and applied in different contexts.

While this white paper focuses primarily on systems designed to achieve benign or beneficial purposes, it is worth bearing in mind the risk that a system could be repurposed in the wrong hands from a benign to a malignant use. For example, recently a facial recognition system used to identify biomarkers of disease was controversially repurposed for identifying biomarkers of criminality. One can equally see that systems for analysing financial market activity might be capable of repurposing to disrupt those same financial markets.’

And, in conclusion:

‘New technologies commonly raise questions of regulation. The ethics and laws surrounding new fields of innovation have often developed alongside the potential of the new field itself – biotech and genomics is a good example where ethical concerns have needed to take precedence over technical progress. Artificial Intelligence is no different in raising questions about governance but, because it relates to thought and the agency of human judgement, it presents a unique challenge.’

Plus, businesses will need to consider plenty of potential regulatory factors, including:

- Has the business accepted AI as part of its risk register?

- Does the business have real-time monitoring and alerting for security and performance?

- Is the causal process auditable?

- Is there an accountability framework for the performance and security of the algorithm?

- Are the algorithmic predictions sufficiently accurate in practice?

- Has the algorithm been fed a healthy data diet?

- Is the algorithm’s objective well-specified and robust to attack or distortion?

- Is the algorithm’s use of personal data compliant with privacy and data processing legislation?

- Does the algorithm produce discriminatory outputs?

- Have you considered what is a fair allocation of risk with your counterparties?

And there’s more, but you’ll just have to have a read.

To be fair to Slaughters, although this report may be part of a marketing effort to drum up some very interesting advisory work on AI risk, it is a balanced and well-informed report that avoids hysteria.
