Legal research pioneer Casetext has said that it uses GPT-3 in its Compose legal brief creation tool, primarily to help rank judge-generated text. The news follows yesterday's report by Artificial Lawyer that Joshua Browder's DoNotPay platform is also working with GPT-3 to create new products for access to justice (A2J) and consumer rights.

Why is this a big deal? It shows that companies already established in the legal tech space are using the software, even though it is still not open to everyone. That is ironic, given that the company behind it is called OpenAI.

Pablo Arredondo, Co-Founder and Chief Product Officer at Casetext, told Artificial Lawyer: ‘Yes, GPT-3 plays a role in Compose, which is distinct from the Parallel Search functionality (see below).

‘GPT-3 helps us to assemble the precedents that support the legal standards you find in the Argument List part of the product.’

However, he added that although GPT-3 helps to assemble the output in Compose, Casetext is not, so far, using any ‘text generated directly by GPT-3’.

He went on to say: ‘We use it to help rank actual judge generated text as part of the human driven editorial process.’

So there we go: a real-world use case, already live. That said, as Alex Hudek of Kira Systems explained in today's article, several issues remain with using GPT-3, especially for legally sensitive matters.

Meanwhile, the Casetext team has also developed a research function built on a Transformer-based neural net, although it does not use the GPT-3 system they rely on elsewhere.

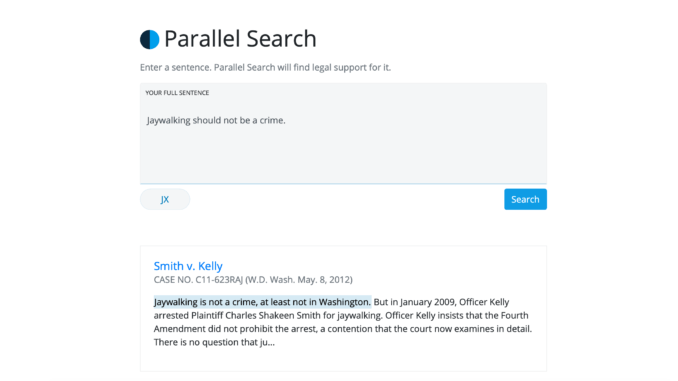

The key point is that it works on a semantic, ‘conceptual’ basis rather than on keywords, or at least that is the aim. It's called Parallel Search, and you can follow that link to try it out.

As Arredondo explained: ‘If you are a litigator… [you can] use a full sentence as the query and note how the results will often contain different articulations of the same concept. [It uses a] Transformer-based neural net, trained on the entire common law [in the US].’

In addition he told Artificial Lawyer: ‘Parallel Search does not use GPT-3. It was developed entirely in-house at Casetext.’

He added that although they are drawing attention to it now, this free release is essentially a basic interface on top of technology they have been developing for about two years. Hence, although AL calls it something of an experiment in the video below, Casetext does not see it as one.

Here is a product demo AL recorded this morning. Check it out. (Naturally, AL's inputs are not the work of a trained lawyer, but hopefully they help to show the semantic nature of the search.)

—

AL found it very interesting, even if it needs more work. The most exciting part was how it genuinely seemed to pull up concepts from case law, sometimes without matching all of the keywords entered in the search.

What do you reckon? Is this useful? Does it differ from what is out there already? Give it a go and tell us what you think.