A draft document setting out EU plans to fine businesses up to €20m or 4% of global revenue for ‘bad AI’ tools promises a lot of new work for lawyers – but also potentially some regulatory headaches for the legal tech sector, as, for example, AI systems that help judges are explicitly singled out.

The document, which was first leaked to Politico and is now doing the rounds, is entitled ‘Regulation on a European Approach for Artificial Intelligence’. The EU has been threatening to ‘do something about AI’ for some time, but this is a lot more heavy-handed than some may have hoped for.

It’s also a recipe for massive overreach in terms of software regulation – largely because the draft’s use of the term ‘AI’, which is defined mainly via possible outcomes, is so broad that it looks to this site to effectively mean ‘any software where a decision is made automatically that may affect a person or business’. The goal of the EU is then to focus on the systems that may cause the most harm and/or impinge on human rights.

The problem there is that automated decision-making is already widespread, and most of it is benign. Naturally, the EU is focused on what it calls ‘high risk AI’ systems (see below), such as recruitment tools, but the draft also explicitly names ‘AI systems intended to be used to assist judges at court, except for ancillary tasks’.

The draft would also see the creation of a European Artificial Intelligence Board, while the rules, once they have moved through the EU’s law-making apparatus, are meant to then be copied over into the legislation of each member state.

Now, you may say: ‘This is not going to impact the legal world, this is really just super-high risk things.’ But, the remit is so wide in this draft document it could potentially capture some legal tech tools as well – in part, as mentioned, because the definition of AI appears to this site to be ‘anything that makes a decision that is software and that could impact someone’.

For example, look at some of the target ‘high risk’ areas the rules are meant to cover – which is just the top end of what they are focused on:

(f) AI systems intended to be used for making individual risk assessments, or other predictions intended to be used as evidence, or determining the trustworthiness of information provided by a person with a view to prevent, investigate, detect or prosecute a criminal offence or adopt measures impacting on the personal freedom of an individual;

(h) AI systems intended to be used for the processing and examination of asylum and visa applications and associated complaints and for determining the eligibility of individuals to enter into the territory of the EU;

(i) AI systems intended to be used to assist judges at court, except for ancillary tasks.

As you can see, this clearly ventures onto the edges of legal tech. For example, take point ‘f’ – AI systems that may help produce evidence. What about litigation analysis tools – which both small and large companies provide, including Thomson Reuters and LexisNexis? Automated analysis of case law that makes decisions about what to show you could result in material submitted to court that impacts a person or business, and could presumably come under this rule. Or if not, how will they create a dividing line? Will they explicitly exclude research tools and litigation outcome prediction systems from these rules?

And what about eDiscovery tools? Many are based on NLP, which we can classify as ‘AI’ and as ‘making decisions’. How will the EU handle that?

Then there is part ‘i’ about tools that help judges. In reality a judge may make reference to plenty of legal tech tools that have an automated decision component to find more information about a case, or related past cases.

Clearly the idea here is to stop courts from using automated systems to assign guilt to someone. But, again, how will they draw the line…?

How will each European country’s bureaucrats, who may have very little experience with legal tech tools and may not be experts in the many variations of decision-making software, decide what is OK, what needs to go through a potentially complex compliance regime, and what is to be banned?

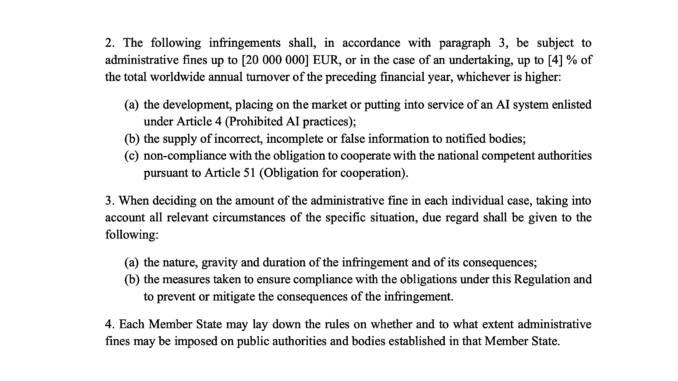

And, given you can get hit by a fine of up to 4% of revenue, or up to €20 million ($24m), for failing to conform to the rules, this may well give some legal tech companies pause for thought.
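To get a feel for the numbers, here is a rough sketch of the two ceilings quoted above. It only does the arithmetic on the €20m and 4% figures; how the draft combines them (for instance, whether the higher or the lower one applies) is not something this article can confirm, so the code simply reports both. The function and constant names are made up for illustration.

```python
# Figures quoted in the article; everything else here is an illustrative assumption.
FIXED_CAP_EUR = 20_000_000
REVENUE_SHARE_PERCENT = 4


def fine_ceilings(global_revenue_eur: int) -> dict:
    """Return both quoted ceilings for a company with the given global revenue.

    Integer euro amounts are used to avoid floating-point rounding.
    This does not decide which ceiling applies; the draft's rule on that
    is not stated in the article.
    """
    revenue_based = global_revenue_eur * REVENUE_SHARE_PERCENT // 100
    return {
        "fixed_cap_eur": FIXED_CAP_EUR,
        "revenue_based_eur": revenue_based,
    }


# Revenue at which 4% of revenue equals the fixed EUR 20m figure.
BREAKEVEN_REVENUE_EUR = FIXED_CAP_EUR * 100 // REVENUE_SHARE_PERCENT

if __name__ == "__main__":
    for revenue in (50_000_000, 500_000_000, 1_000_000_000):
        print(revenue, fine_ceilings(revenue))
    print("break-even revenue:", BREAKEVEN_REVENUE_EUR)
```

On these figures the two ceilings meet at €500m of global revenue, which is well above the turnover of most legal tech vendors, so for a typical company in the sector the 4% figure works out to less than €20m.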

The draft rules also talk about the need for a ‘conformity assessment body’ that will handle matters ‘including calibration, testing, certification and inspection’ of AI tools.

Now, again, some may believe this will have no impact on a modest NLP-driven system in the legal world. But, who is going to be making those decisions? Who decides who gets dragged into this ‘conformity assessment’ regime? Maybe they’ll just call in everyone who has the letters ‘AI’ on their website and then ask them to prove they are not subject to the rules?

The one saving grace here is that the EU, for now, wants to focus on systems that may relate to:

(a) injury or death of a person, damage of property;

(b) systemic adverse impacts for society at large, including by endangering the functioning of democratic processes and institutions and the civic discourse, the environment, public health, [public security];

(c) significant disruptions to the provision of essential services for the ordinary conduct of critical economic and societal activities;

(d) adverse impact on financial[economic], educational or professional opportunities of persons;

(e) adverse impact on the access by a person or group of persons to public services and any form of public assistance;

(f) adverse impact on fundamental rights [as enshrined in the Charter], in particular on the right to privacy, right to data protection, right not to be discriminated against, the freedoms of expression, assembly and association, personal freedom, right to property, right to an effective judicial remedy and a fair trial and right to international protection [asylum] [longer list of rights can be specified if necessary]

But… even here, as you can see in ‘f’, anything that can have an ‘adverse impact [on]…an effective judicial remedy and a fair trial’ is a target – and again, how will they decide that? What if lawyers demand that opposing counsel, or an inhouse legal team, reveal all the legal tech tools with any kind of ‘AI’ element that they may have used in relation to a matter?

Conclusion

These are draft rules, and in a leaked document, so they need to be taken with some caution, but this site has seen enough EU documents over the years to feel confident this is the real thing – even if it is a draft and may change a lot in the months and years ahead.

The aim here is to go after companies that use software to make decisions that really harm people, e.g. a clearly discriminatory recruitment system, not a tool that helps you to find a change of control clause as part of an M&A deal.

But… as explored above, the rules are so wide-ranging, so determined to have some regulatory and compliance approval power over the software sector, that companies that really should not be targeted could get sucked into this, especially where they are connected to case law and court processes.

Even having to comply with some kind of regulatory ‘AI kitemark’ would be a burden for any legal tech company operating in the EU, although that is likely to be the worst outcome for most. In a very small number of cases, however, we could see tools that make decisions for lawyers, or especially judges, perhaps related to research and legal data sorting, that could be seen as influencing the outcome of a case, and would therefore unintentionally end up in the high-risk category.

Overall, this site strongly supports regulating automated decision systems that, for example, reject people from jobs without even interviewing them in person, or that refuse credit based on an obscure algorithm. But the risk here is that what the EU is tackling is so huge, so broad, that it could potentially end up impacting some legal tech companies – something that in the past had always seemed unlikely.

How this all develops remains to be seen, but if this draft set of rules is anything to go by, then the legal tech sector, especially that part of it involved in litigation, has just received a warning shot.

—

The full high risk AI list:

(a) AI systems intended to be used to dispatch or establish priority in the dispatching of emergency first response services, including by firefighters and medical aid;

(b) AI systems intended to be used for the purpose of determining access or assigning persons to educational and vocational training institutions, as well as for assessing students in educational and vocational training institutions and for assessing participants in tests commonly required for admission to educational institutions;

(c) AI systems intended to be used for recruitment – for instance in advertising vacancies, screening or filtering applications, evaluating candidates in the course of interviews or tests – as well as for making decisions on promotion and termination of work-related contractual relationships, for task allocation and for monitoring and evaluating work performance and behaviour;

(d) AI systems intended to be used to evaluate the creditworthiness of persons;

(e) AI systems intended to be used by public authorities or on behalf of public authorities to evaluate the eligibility for public assistance benefits and services, as well as to grant, revoke, or reclaim such benefits and services;

(f) AI systems intended to be used for making individual risk assessments, or other predictions intended to be used as evidence, or determining the trustworthiness of information provided by a person with a view to prevent, investigate, detect or prosecute a criminal offence or adopt measures impacting on the personal freedom of an individual;

(g) AI systems intended to be used for predicting the occurrence of crimes or events of social unrest with a view to allocate resources devoted to the patrolling and surveillance of the territory;

(h) AI systems intended to be used for the processing and examination of asylum and visa applications and associated complaints and for determining the eligibility of individuals to enter into the territory of the EU;

(i) AI systems intended to be used to assist judges at court, except for ancillary tasks.

—

And here are some of the obligations for AI tool providers:

Obligations of providers of high-risk AI systems

1. Providers of high-risk AI systems shall ensure that these systems comply with the requirements listed in Chapter 1 of this Title.

2. Providers of high-risk AI systems shall put in place a quality management system that ensures compliance with this Regulation in the most effective and proportionate manner. The quality management system shall be documented in a systematic and orderly manner in the form of written policies, procedures and instructions and shall address at least the following aspects:

(a) strategy for regulatory compliance, including compliance with conformity assessment procedures and procedures for the management of substantial modifications to the high-risk AI systems;

(b) techniques, procedures and systematic actions that will be used for the design, design control and design verification of high-risk AI systems;

..

(d) examinations, tests and validations procedures that will be carried out before, during and after the development of high-risk AI systems, and the frequency with which they will be carried out;

(e) technical specifications, including standards, that will be applied and, where the relevant harmonised standards will not be applied in full, the means that will be used to ensure that the high-risk AI systems comply with the requirements of this Regulation;

(f) systems and procedures for data management, including data collection, data analysis, data labelling, data storage, data filtration, data mining, data aggregation, data retention and any other operation regarding the data that is performed before and for the purposes of the placing on the market or putting into service of high-risk AI systems

(g) risk management system as set out in Annex VIII;