A newly launched AI Global Governance commission (AIGG), tasked with forming links with politicians and governments around the world to help develop and harmonise rules on the use of AI, has suggested that at least one key regulation should be that any decisions made by an AI system ‘must be tracked back to a person or an organisation’.

Although the view was only an early product of meetings held yesterday ahead of the launch event for the AIGG, which is backed by the UK Parliament’s APPG AI group and the Big Innovation Centre, it could become something of a standard ethical line for the many legal projects now developing in this area.

Earlier this month, for example, the Law Society launched its own Public Policy Commission on Algorithms and Justice, one of several AI ethics initiatives around the world.

Ensuring that any algorithmic decision is traceable to a person or organisation could give society a greater sense that someone, at least, is responsible for the actions of an automated system, and that important decisions are not being made in a regulatory vacuum, without any recourse to legal action against a party that has caused harm to another. In fact, one could argue that being unable to assign responsibility for the actions of an algorithm would in effect undermine the justice system and put AI’s outputs on a par with ‘an act of nature’, i.e. beyond the ability of society to apply rules.

The AIGG meeting also stressed that regulators, both nationally and globally, needed to move a lot faster than they currently are, because AI technology is now being developed and put to use in society at a much faster pace.

The APPG AI group, which is behind the global initiative, is co-chaired by Stephen Metcalfe MP and Lord Tim Clement-Jones CBE, who is also a partner at global law firm DLA Piper.

The group said that it is reaching out to governments and politicians around the world and has already gained strong support for co-operation from South Korea, a major tech hub, and from Saudi Arabia. Although perhaps not seen as a tech-first nation, Saudi Arabia is now working hard to build a reputation for tech innovation as it prepares for a more diverse and less oil-dependent economy. Other nations in the Middle East are also understood to be interested in joining the initiative. China, France, Canada and India were also highlighted as key countries that the AIGG wants to work with at this stage.

It’s possible that Artificial Lawyer missed a mention of it, but it was unclear whether the US would also be a key part of the global initiative at this stage. That seems an important omission, given that the US is home to many AI companies and experts.

The AIGG itself is headed by Gosia Loj and Prof. Birgitte Andersen of the Big Innovation Centre, while the APPG AI is supported by a number of professional services firms, including CMS Cameron McKenna Nabarro Olswang, Deloitte, EY, KPMG and PwC.

In January 2017, Metcalfe and Lord Clement-Jones established the All-Party Parliamentary Group on Artificial Intelligence (APPG AI) with the aim of exploring the impact and implications of AI.

Following its first year of evidence meetings, the APPG Advisory Board recognised the need for a global response to emerging AI issues and proposed the establishment of the AIGG.

The co-chairs of the APPG AI have issued an open letter in support of the initiative, recognising that most AI-related issues transcend national borders and depend on the co-operation of the global community.

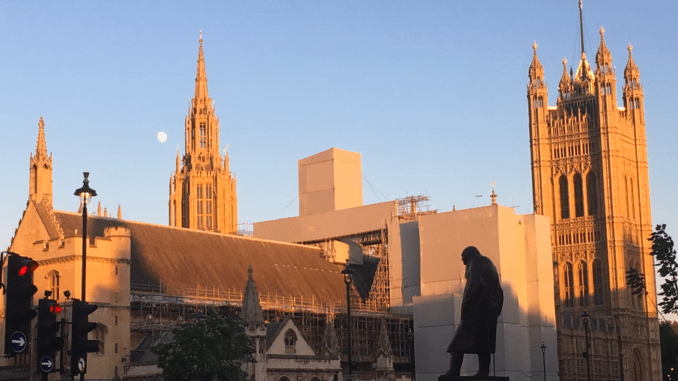

[ Main pic photo credit: RT, ‘Moon rise over Houses of Parliament, with Churchill looking on.’ ]