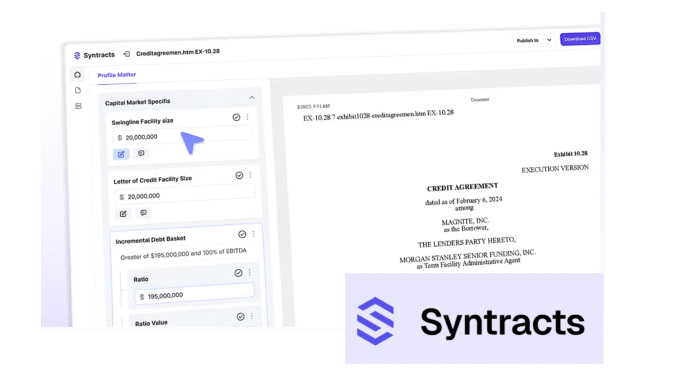

Syntracts, a pioneering on-prem contract AI platform that uses a novel type of data architecture, has raised a $5.3m Seed round led by Hyperplane (see the AL interview with the founders below about how they’re approaching contract analysis from a very different angle).

The US company, which is in A&O Shearman’s Fuse incubator, and whose founders are from Latham & Watkins and Uber AI Labs, provides ‘a secure infrastructure layer that integrates with firms’ existing workflows and AI tools’. Then, through its API, teams can ‘ingest documents, organize them into structured, searchable data, and query results directly inside their systems’.

‘By turning legacy contracts into reliable, searchable knowledge, Syntracts makes a firm’s existing AI tools smarter, faster and more accurate, all while keeping every piece of data private and on-prem,’ they explained.

Through its secure API, firms can pull documents from systems like iManage, NetDocs or SharePoint and organize them into structured data. They can then send those results back into the tools they already use, from dashboards to AI assistants, while keeping the information inside their own environment, they added.

To watch the interview with Artificial Lawyer, please press Play. (There is a full AI transcript of the discussion below, which covers their approach to on-prem AI, KM and more.)

–

Doug Bemis, co-founder of Syntracts and former CTO at Uber AI Labs, commented: ‘Our mission is simple: enable law firms to use AI that’s both private and verifiable. Firms don’t need another app — they need a secure data layer that powers their existing AI with clean, structured knowledge from their own contracts.’

While Christopher Martin, co-founder of Syntracts, said: ‘In law, you shouldn’t have to choose between airtight privacy and answers that are consistent and reliable. We’re building the secure AI backbone for the legal industry, one that helps firms unlock the full value of their data safely and at scale. The backing … highlights how critical a privacy-first foundation is to the future of legal AI.’

Other investors included: Khosla Ventures, Myriad Venture Partners, Harvest Capital, Fortitude Ventures, and Point72 Ventures.

More about the company here.

—

AI Transcript of AL Interview

Hey everybody, Richard Tromans here again at Artificial Lawyer TV. I’m doing a special interview today with Doug and Chris from Syntracts. They’ve just raised a heap of money and they’ve taken a very original approach unlike many other legal tech companies to AI. Good to have you on my show guys.

First of all, let’s just get straight into this. Let’s talk about the funding. First of all, if you could just tell us a bit about that, how much, who from, what will it be used for?

Doug (01:05.471)

Yeah, super excited to be here. Good to talk to you again, Richard. We have raised a little under $5.5 million, and we’re super excited about the coalition of people that have come together to support us here. So we have a great lead in a place called Hyperplane Ventures. They are a machine learning fund out of Boston. So they’re on their fourth fund, very deep in machine learning. They have also been doing AI since before AI was cool and we’re super excited to have them on board.

They led the round. We had additional participation from Khosla Ventures, which came in this round, and our past leads from the previous pre-seed round also doubled down. So that’s Point72 Ventures and Myriad Ventures.

Doug (01:53.377)

We’re super excited. And generally, you know, when we can get into it more, we’ve developed a different type of technology. We are a machine learning company. We use the new technology, but in a different way than most people. And we have developed this new technology. It works well. And this money is now to scale it. So kind of follow up on our initial traction and get it out wider and more effective to more.

Richard Tromans (02:18.778)

I mean, this is an A round, a C round, but what is this? Seed, this is a seed, this is a seed, okay, right, All right, so you got your money, you got your backers, you got the product up and running, and just the viewers who are wondering, okay, so what actually is this? What is so different? Chris, Doug, can you just explain what is this in a nutshell? Why is this different?

Doug (02:21.577)

It is a seed round. Seed. Yeah.

Doug (02:42.613)

Yeah, it’s very different. I guess, Chris, you want to describe just from the point of view of the problem it solves and how it’s shaped differently from a law firm’s perspective, and then I can go into the technology a bit.

Chris Martin (02:55.574)

Yeah, sure. So obviously, Richard, you know this as well as probably all the viewers and readers. But legal AI adoption has grown exponentially. And along with that, so have concerns about privacy and accuracy and reliability. And so when I came to Doug with the idea for Syntracts, basically, I gave him a list of constraints from my time in Big Law.

And then he and I worked to try to solve this. And so what that allows us to do is run entirely on-premise custom models for each customer that each customer ultimately is in control of in a way that you can’t be if you’re relying on OpenAI or Anthropic.

Richard Tromans (03:45.338)

Even if you’re operating, just again for the viewers, so even if you’re operating for a third party legal tech vendor that is then relying on OpenAI or Anthropic, you’re still stuck with this challenge of you’re operating off an external system.

Chris Martin (04:02.05)

That’s right. And if you think about sort of an extreme example, every once in a while, Sam Altman tweets, and that’s how the world learns that one of the models that they’re running has been deprecated. And in some number of days or weeks, everything has to move on to another. With our system, the models that you and your firm train are hosted, if you want, on your firm’s premises. Otherwise we can also accommodate an Azure VPC or pure cloud, but regardless, everything is under your control.

We don’t prompt at all, so there’s no need to change prompts to adapt to a new model style. You simply continue to use the tool, it continues to improve, and any changes that occur are entirely under your control.

Richard Tromans (04:51.988)

Let’s just dig into some of the technical aspects here just for clarity. So is this an LLM? We’re talking about a large language model or are we talking about a completely different technology here?

Doug (05:01.493)

Yeah, so I guess just to reset also what we do, I’ll make sure that that’s set here. So we extract structured information from large contracts and we make that usable for you in any way you would like downstream. So, kind of diving into the technical aspect, we live in kind of a weird world currently.

So there have been these kind of magic black boxes that have been developed and kind of anybody has access to them. And there’s a belief that if you just ask them nicely enough, they will give you all the best answers. And everybody is kind of trying to ask as nicely as possible. And generally we view that as not a great exercise or use of time. For some things, it’s awesome. So we’re not against the large foundation models. We think they’re exceptionally good for many different things. We can kind of go into where they fit best. But for being experts in particular documents or legal language, we just don’t think prompting is the right way to go or the most effective way to go.

So what we do is we train effectively thousands of experts to each be precisely smart in a particular small section of a particular document. And we do that by fine-tuning small language models using synthetic data.

Richard Tromans (06:22.67)

So I think this is the thing that will probably blow people’s minds if they didn’t read the previous Artificial Lawyer article about it, that you basically have dozens of small language models, each of which is focused specifically on a particular type of text, a particular type of legal language.

Doug (06:38.431)

Yeah, so essentially there’s been a lot of effort to try to fine-tune models to be experts in particular domains. And I think there’s been empirical discoveries that that doesn’t work great if you just throw a bunch of documents in legal or finance and fine-tune for them. So we view it more as: the better way to fine-tune a model is to create actually a mixture of a ton of different experts and actually teach them to do specific small, precise things and orchestrate them together. And that’s what we do.
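As a rough illustration of the "many narrow experts, orchestrated together" idea described here, the sketch below routes labelled contract sections to one extractor each. It is not Syntracts’ implementation: the names `EXPERTS`, `run_experts`, `extract_governing_law` and `extract_term_years` are hypothetical, and plain regular expressions stand in for what the interview describes as fine-tuned small language models.

```python
import re
from typing import Callable, Dict, Optional


# Hypothetical stand-ins: in the approach described, each expert would be a
# small fine-tuned model. Simple regex functions are used here for illustration.
def extract_governing_law(text: str) -> Optional[str]:
    match = re.search(r"governed by the laws of ([A-Z][\w ]+?)[.,]", text)
    return match.group(1) if match else None


def extract_term_years(text: str) -> Optional[int]:
    match = re.search(r"term of (\d+) years?", text)
    return int(match.group(1)) if match else None


# One narrow expert per section type (assumed labels).
EXPERTS: Dict[str, Callable[[str], object]] = {
    "governing_law": extract_governing_law,
    "term": extract_term_years,
}


def run_experts(sections: Dict[str, str]) -> Dict[str, object]:
    """Route each labelled section to its matching expert; skip unknown sections."""
    return {
        name: EXPERTS[name](text)
        for name, text in sections.items()
        if name in EXPERTS
    }


sections = {
    "governing_law": "This Agreement is governed by the laws of New York.",
    "term": "This Agreement has a term of 3 years.",
    "notices": "Notices must be sent in writing.",  # no expert registered
}
structured = run_experts(sections)
print(structured)
```

The point of the design is that each expert only has to be right about one small thing, and adding coverage means registering another expert rather than rewriting a prompt.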

Richard Tromans (07:12.314)

And on the machine learning aspect and also, I guess, on the training for specific use cases, I mean, does a firm have the ability to improve on the outputs? And secondly, can they then, you know, let’s say you work with a particular law firm and they’re doing tons and tons of private equity work, which has certain types of document that you’re not going to get in other types of work. And they’re saying, right, this is what we want you to focus on. Can you, so A, as they keep doing that work, will the outputs get better? So is there a machine learning aspect? And then secondly, can you customize specifically to their use case?

Doug (07:47.722)

Yes, so that’s precisely what we created. We’re seeing, I think, probably a bunch of prompting fatigue across everybody. So we have created a platform which you just use normally to review contracts. As you do, it can retrain whenever it might miss something, and you can add in additional information as soon as it comes to mind and just expand your particular models. So it’s designed to adapt and expand to any data and any documents you

Richard Tromans (08:17.038)

But is it, I mean, how much human input? Because if we go back to the old NLP machine learning systems, it was very human intensive. I mean, the human was effectively leading it along by the nose kind of thing. You’d say, you know, that’s a bad computer. You don’t do it like that. Do it like this, you know, tick tick, tick tick, cross cross, you know, the old layout. Is it going to be a bit like that in that there’ll be a junior associate going, that’s right, that’s wrong, that’s right, that’s wrong and tuning it up or will it be different to that?

Chris Martin (08:46.443)

Only at the very beginning, and I know every vendor says this, but with very few seed examples, this is why we built our system around the use of synthetic data. So instead of requiring hundreds of human labeled examples, we can create thousands from just say a dozen or two dozen labeled examples. And that really lessens the upfront manual labor required to get up and going.

Richard Tromans (08:48.91)

Right.

Richard Tromans (09:13.658)

So just for clarity, so people understand: the idea is that you’re going to get better results, more accurate results, very, very few hallucinations, if any, that’s the goal anyway. But the trade-off is, because nothing is free, you do have to put some effort in at the beginning.

Doug (09:30.497)

Yeah, so essentially, we believe no free lunch. So yes, so everybody has been sold and would like the idea of a magical oracle that gives them the answer to everything now. And so it’s interesting to kind of continue with that. But yes, you have to put in some upfront work. It depends. We’re developing our own kind of like set of things for which they’ll work out of the box. So if you just wanted that set, then there’s no upfront work necessary. But yes, that’s how it works.

Richard Tromans (09:58.422)

And in terms of the range of documents, will it look at anything, any legal document anywhere, or have you got specific verticals that you’re looking at at the moment?

Doug (10:09.217)

Yeah, so this is kind of where I also want to stress we don’t not use the technology. We just use it differently. And LLMs are exceptionally powerful in a bunch of different ways. Most people are used to the chat version of them, but we use them as basically flexible functions. So they’re just more general and stronger at learning these things than anything that’s come before them. So we’ve created a platform around that that can then address any type of contract at all. And you can kind of span wherever you would like. So we are dealing in a bunch of different practice groups with a bunch of different firms on this.

Richard Tromans (10:46.842)

So there’s also a degree of sort of, there’s a consulting input as well. So you have to go down and meet the firm and you have to say, right, what are the documents you want to focus on?

Doug (10:56.627)

Yeah, so that’s one of the benefits we believe is this is designed, purposely designed to fit into a law firm’s current workflow. So God knows there’s enough tools out there. And so we want to be able to seamlessly essentially remove what is currently manual effort, fit into an existing workflow. And since we’re API first, we can be seamless kind of behind the scenes for the attorneys using the output, at least to begin.

Richard Tromans (11:23.876)

Gotcha. I mean, to my mind, you know, ironically, the fact that you actually have to get in there, that they have to consciously engage with you at least to begin with is actually a good thing because what I’m seeing more and more of is people say, hey, well, we bought some AI and I use it like maybe twice a day for like some small task, but it’s on the fringes of their experience because they haven’t really built it into their workflows. And it’s certainly not at that reliable point where they’ll just trust it, because they haven’t put in that extra effort to integrate it into their lives, in part because you can get value so quickly from relatively small tasks.

Again, ironically, the fact that you can get value very quickly from something very small almost disincentivizes you from doing the hard work. It’s a bit like with Sora, you can make a five second video of some Star Wars-like thing just by going, hey, make me a blah, blah, blah, blah, right? But that disincentivizes you from then sitting down and actually making the effort to actually make something for real.

Doug (12:30.37)

Yeah, and that’s kind of actually a good intro to where we see ourselves in the ecosystem. So in a ton of legal tech, many people are basically necessarily taking the wide but shallow approach because that’s what the models behind them do. And you’re right, you can get value given the right task. You can get a lot of value very quickly out of that, but you can’t really get deep expertise and trust out of that. So we see ourselves

Richard Tromans (12:35.162)

Hmm.

Richard Tromans (12:42.618)

Mm-hmm.

Doug (12:57.804)

complementing those approaches. And you’re right, you do have to get in. And one way to view what we do is we allow you to actually teach your expertise to these models and these systems, as opposed to relying on it learning it somewhere else. And we allow you to do that.

Richard Tromans (13:14.021)

So, so, so interesting. It’s got my brain going. I mean, um, does that also involve preference? So if you’re doing a review, you know, obviously you’ve got identification, extraction and so forth, but when it comes to actually reviewing and adding text, changing text, can you set preferences for the particular users, or is that something you’d like to do? You know, like someone said a while ago, you know, my AI system, every time it reviews anything, all of its answers sound like ChatGPT. I want it to sound like me.

Chris Martin (13:48.121)

Yeah. So that’s actually one of the fun things that we’ve been showing our initial customers and they’ve gotten excited about. Although often people think about this as data extraction or data structuring, we can also do pretty complex, say, classifications like buyer friendly, seller friendly, or good or bad according to a particular associate or partner. And so you can have, for any given snippet of text, your own criteria for what that might be, and the exact same process applies. So you could, for instance, train up a bunch of good versus bad models and then throw a contract in and see what proportion of the relevant provisions fall into each bucket.
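The "proportion of provisions in each bucket" calculation Chris mentions is simple to picture. The following is a minimal sketch under stated assumptions: `bucket_proportions` and `toy_classifier` are hypothetical names, and a keyword rule stands in for a model trained on a particular partner’s or associate’s judgments.

```python
from collections import Counter
from typing import Callable, Dict, List


def bucket_proportions(
    provisions: List[str], classify: Callable[[str], str]
) -> Dict[str, float]:
    """Classify each provision and return the share falling into each bucket."""
    counts = Counter(classify(p) for p in provisions)
    total = sum(counts.values())
    return {label: n / total for label, n in counts.items()} if total else {}


def toy_classifier(provision: str) -> str:
    # Assumption: a trivial keyword rule standing in for a trained classifier.
    return "seller_friendly" if "as is" in provision.lower() else "buyer_friendly"


provisions = [
    "The goods are sold as is, without warranty.",
    "Seller shall indemnify Buyer for all losses.",
    "Buyer may terminate for convenience on 10 days' notice.",
    "Software is provided AS IS.",
]
shares = bucket_proportions(provisions, toy_classifier)
print(shares)
```

Because the classifier is passed in as a function, the same tally works whether the buckets are buyer versus seller friendly or one reviewer’s "good" versus "bad".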

Richard Tromans (14:32.121)

So to put it in sort of layman’s terms, it could red line off a playbook.

Chris Martin (14:39.152)

So we have not gotten into drafting specifically, just to be very clear. But yes, as part of a contract review process, you could certainly. I know, for instance, large businesses often are primarily looking for: can I sign this contract or do I need to go back with another draft? And if those customers wanted to set up models to help them do that, that would work really well with our system.

Richard Tromans (15:08.794)

So just to wrap up, what would be a couple of classic use cases for a law firm or in-house team to be using this? What would be a classic use case?

Chris Martin (15:18.798)

Yeah, so at its base level, we view ourselves as sort of the data layer for a law firm. And so currently, that is at the largest law firms being done manually by knowledge management attorneys or practicing attorneys. Once a deal is signed, you’re expected to answer a series of questions about that transaction, put that into a database, and that is then made available to the firm. So we, at the very smallest level, automate that very manual process.

But what we’re excited about is basically all firms currently, as you know, are buying these AI assistants. You know, there’s some big names, there’s some smaller names. And when you connect those to a store of trusted data, like the structured data that we can help firms generate, they become much more trustworthy. We can also provide links back to the document itself, including the specific text where a particular answer came from. And so…

Richard Tromans (16:20.826)

So, so, if it wouldn’t be any, I mean, it sounds like you could use it for a degree of knowledge management, refining that knowledge management that you have in your DMS. You could use it in litigation work for perhaps, I’m not quite sure how that would pan out with working with, with big database companies, but I guess it could. And then, you know, things like due diligence and writing, you know, creating the binders afterwards and so.

Chris Martin (16:46.582)

Exactly. Yeah. You know, basically any instance where you need to understand what is in a large corpus of contracts in a way that you can actually use that data down the road, we’re finding customers who are able to find value there.

Richard Tromans (17:03.502)

Gotcha. And I suppose on the in-house side, I mean, again, you know, a whole bunch of similar contracts, perhaps for a particular type, you know, like licensing agreements or whatever, NDAs, et cetera. You could zip through those and pull out any inconsistencies or that kind of thing.

Chris Martin (17:21.27)

Exactly. Yeah, we were actually talking to a large insurance company the other day. They’re interested. You know, there’s a rise in plaintiff-side AI tools as well, which is fueling an increase in, for instance, demand letters being sent to companies like gigantic insurers. And what they’re interested in trying out is, you know, can they feed a demand letter into our system and immediately get what they need to act on?

Richard Tromans (17:34.05)

That’s true.

in coming.

Richard Tromans (17:49.806)

Yeah.

Chris Martin (17:50.156)

rather than having an adjuster or an attorney review that every time.

Richard Tromans (17:54.426)

And the key to this is accuracy. I mean, this is the thing. I mean, going slightly off piste, but this whole idea of large corporates being able to create rapid responses, the Achilles heel was not that they couldn’t do it rapidly, it’s: was it accurate? That was the real thing, wasn’t it? Because there’s no point having an automated system that will draw up a response if they’ve got some of the facts wrong.

Chris Martin (18:18.831)

Well, and it’s a tiny bit worse than that, actually. Even a tool that’s perfectly accurate, but where you can’t immediately trust that accuracy, doesn’t work either. Because then you’re going back to the demand letter, the contract, whatever it might be. Exactly.

Richard Tromans (18:31.5)

Wasting your time. Yeah, you go. So just I mean, I know it’s very subjective and you know, the ongoing debate about what is accuracy? Where is it relevant? That’s usefulness of other particular use cases where there is a yes, no answer, you know, so forth. It’s a broad philosophical subject, but just generally how you’re coming out on accuracy. So if I gave you a contract, if I’m cheesy, I give you a bunch of contracts and I say, right, extract all the stuff. I don’t want any humans involved. Just give me the output, what kind of results are we looking at?

Chris Martin (19:05.647)

Yeah, I mean, sorry, Doug, do you want to go?

Richard Tromans (19:08.391)

Aside from the initial training up and learning.

Doug (19:12.96)

Yeah, I mean, generally it depends on, it does depend a bit on the maturity of the training for that particular document and the particular type of data points. But, you know, out of the box, you know, as we deliver things to customers, they come in above 90% on the accuracy that they measure. And as we train with them, it gets above 90%, 95%. And, you know, as we move forward, there’s no reason to believe this can’t just asymptote towards 100.

So I guess one of the things that is very important, I think, to mention is currently, there’s no way to kind of make prompts better other than have people think more about what they should ask the machine. And that’s just, A, not very effective, and B, you’re always kind of squeezing a balloon. So we kind of think of it as squeezing a balloon. You’re trying to fit things in, and something else pops out the other side. To do it, our approach is more kind of traditional, but more reliable as a machine learning approach.

Richard Tromans (19:53.37)

Okay.

Doug (20:11.402)

trains at errors and doesn’t lose what it had before and just gets better over time.

Richard Tromans (20:15.002)

Yeah, yeah. Well, I mean, just to wrap up, I mean, for me, the, you know, the trillion dollar question for the legal market is can we ever get to 99.9% accuracy with, okay, you’ve got to put some human input up front. Okay, that’s fair enough. But if you can just trust the output, just, just like, you know, I will get on a plane with this. I do not care, you know.

Doug (20:38.56)

Yeah, and that’s kind of the nice part about our platform: we believe that’s possible using our platform and we allow users to track that. So you can see the accuracy upfront over time, based upon your review of the documents. You can see how it improves over time and our customers also want to just trust it. And so there’s a set for which they reach a particular accuracy and you don’t need to look at it anymore. And those increasingly are the entire things that they’re getting out of the documents.
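To make the accuracy-tracking idea concrete, here is a hedged sketch of how reviewer feedback could be turned into per-field accuracy and a "no longer needs review" list. The function names, the sample data and the 0.95 threshold are all assumptions for illustration; the interview does not say how Syntracts computes or thresholds accuracy.

```python
from collections import defaultdict
from typing import Dict, List, Tuple


def field_accuracy(reviews: List[Tuple[str, bool]]) -> Dict[str, float]:
    """Compute per-field accuracy from (field_name, reviewer_marked_correct) pairs."""
    totals: Dict[str, int] = defaultdict(int)
    correct: Dict[str, int] = defaultdict(int)
    for field, ok in reviews:
        totals[field] += 1
        correct[field] += int(ok)
    return {field: correct[field] / totals[field] for field in totals}


def trusted_fields(accuracy: Dict[str, float], threshold: float = 0.95) -> List[str]:
    """Fields whose measured accuracy meets the (assumed) trust threshold."""
    return sorted(f for f, acc in accuracy.items() if acc >= threshold)


# Hypothetical review log: 20 checks per field.
reviews = (
    [("governing_law", True)] * 20
    + [("term", True)] * 18
    + [("term", False)] * 2
)
accuracy = field_accuracy(reviews)
print(accuracy)
print(trusted_fields(accuracy))
```

In practice a firm would probably also want a minimum number of reviewed examples before trusting a field, since a high score on a handful of checks says little.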

Richard Tromans (21:09.018)

Fantastic. And just very quickly, should have asked you at the beginning actually, but what will you spend the money on aside from a new Porsche?

Doug (21:15.866)

Yeah. So, right. So we’re super excited. We’re doing two things, essentially. So we are solidifying the foundation of our technology. So hiring up a bit on the machine learning implementation and then also scaling side and then, you know, dealing more with the increased customers. So we’re getting, you know, some account management sales and support for people.

Chris Martin (21:16.362)

Beautiful.

Richard Tromans (21:41.146)

GTMs and stuff. Fantastic. And soon, hopefully, you’ll be opening an office in London.

Doug (21:48.438)

That’s our hope. Yes, we have increasing reason to be out there. We love London, so that would be great.

Richard Tromans (21:54.33)

All right, guys, thank you, Chris, thank you, Doug, really interesting. And there’ll be other text around this video if you’d like to know more, but that’s all we have. Bye. All right, stop there.

Chris Martin (22:02.865)

Great, thanks so much.

Doug (22:03.362)

This was great. Thank you.
