This week tech giant Microsoft released what looks set to become a reference text for lawyers with an interest in AI. This epic paper (which you can download here) is titled ‘The Future Computed’ and sets out the company’s vision of technological change and, in particular, how AI will affect all of us from a legal perspective.

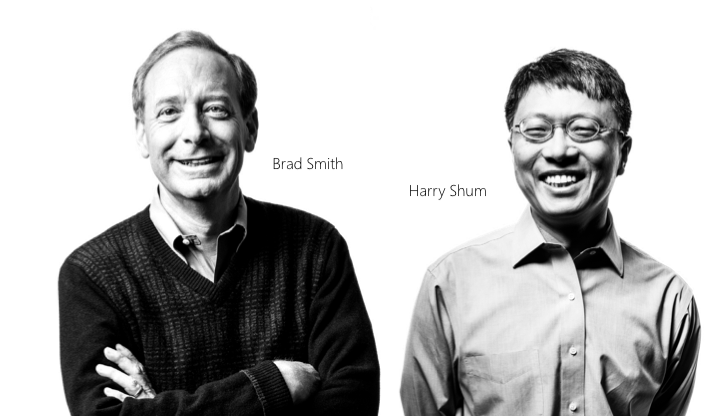

The authors, Brad Smith, President and Chief Legal Officer, and Harry Shum, Executive Vice President, Artificial Intelligence and Research, spend a lot of time in this report considering how the law will intersect with AI. And one finding they feel is inevitable is that the field of ‘AI Law’ will become very real among the world’s lawyers.

They don’t specifically say big commercial law firms will have an ‘AI Law’ practice, but that would seem to be a possibility in the future. Or perhaps AI issues will be integrated into firms’ existing practice areas? What’s clear from the report is that, in the future, a law firm telling its clients that it has an ‘AI law sector group’ will be very, very normal.

Below is the TL;DR version of the 149-page report, or at least some of the bits Artificial Lawyer spotted that looked pithy and powerful.

Intro –

- Compared with the world just 20 years ago, we take a lot of things for granted that used to be the stuff of science fiction.

- Before long, many mundane and repetitive tasks will be handled automatically by AI, freeing us to devote our time and energy to more productive and creative endeavours.

- Will the future give birth to a new legal field called “AI law”? Today AI law feels a lot like privacy law did in 1998. Some existing laws already apply to AI, especially tort and privacy law, and we’re starting to see a few specific new regulations emerge, such as for driverless cars.

- But, AI law doesn’t exist as a distinct field. And we’re not yet walking into conferences and meeting people who introduce themselves as “AI lawyers.” By 2038, it’s safe to assume that the situation will be different. Not only will there be AI lawyers practicing AI law, but these lawyers, and virtually all others, will rely on AI itself to assist them with their practice.

- The real question is not whether AI law will emerge, but how it can best come together — and over what timeframe.

- A consensus then needs to be reached about societal principles and values to govern AI development and use, followed by best practices to live up to them.

- One of the lessons for AI and the future is that we’ll all need to be alert and agile to the impact of this new technology on jobs.

While we can predict generally that new jobs will be created and some existing jobs will disappear, none of us should develop such a strong sense of certainty that we lose the ability to adapt to the surprises that probably await us. But as we brace ourselves for uncertainty, one thing remains clear. New jobs will require new skills. Indeed, many existing jobs will also require new skills. That is what always happens in the face of technological change.

Main Report –

- The design of any AI system starts with the choice of training data, which is the first place where unfairness can arise. Training data should sufficiently represent the world in which we live, or at least the part of the world where the AI system will operate.

- It will be essential to train people to understand the meaning and implications of AI results to supplement their decision-making with sound human judgment.

- If the recommendations or predictions of AI systems are used to help inform consequential decisions about people, we believe it will be critical that people are primarily accountable for these decisions.

- We believe the following steps will promote the safety and reliability of AI systems:

• Systematic evaluation of the quality and suitability of the data and models used to train and operate AI-based products and services, and systematic sharing of information about potential inadequacies in training data.

• Processes for documenting and auditing operations of AI systems to aid in understanding ongoing performance monitoring.

• When AI systems are used to make consequential decisions about people, a requirement to provide adequate explanations of overall system operation, including information about the training data and algorithms, training failures that have occurred, and the inferences and significant predictions generated, especially.

• Involvement of domain experts in the design process and operation of AI systems used to make consequential decisions about people.

• Evaluation of when and how an AI system should seek human input during critical situations, and how a system controlled by AI should transfer control to a human in a manner that is meaningful and intelligible.

• A robust feedback mechanism so that users can easily report performance issues they encounter.

- It seems inevitable that ‘AI law’ will emerge as an important new legal topic.

- It seems likely that many near-term AI policy and regulatory issues will focus on the collection and use of data.

As policymakers look to update data protection laws, they should carefully weigh the benefits that can be derived from data against important privacy interests.

Another important policy area involves competition law. As vast amounts of data are generated through the use of smart devices, applications and cloud-based services, there are growing concerns about the concentration of information by a relatively small number of companies.

Relying on a negligence standard that is already applicable to software generally to assign responsibility for harm caused by AI is the best way for policymakers and regulators to balance innovation and consumer safety, and promote certainty for developers and users of the technology. This will help keep firms accountable for their actions, align incentives and compensate people for harm.

…and there is plenty more.

Readers are encouraged to have a look at the report, here, and decide for themselves. But there is plenty there to get the legal world thinking. For vendors, it looks like their internal legal needs will increase; for lawyers, it could, as the authors implicitly suggest, see the rise of a new practice area called ‘AI Law’; and for us citizens and the governments of the world, it will mean a debate about algorithms, power, privacy, control and much more that will go on for many years to come.

One can really see this as just the next chapter in a long legal evolution that started the very first time a computer used a program to do something that affected a human being. The AI aspect just massively increases the impact.

Photo: Microsoft Corp, 2018.
