Today, many of us in legal tech land are coming down from the excitement of GLH2018, but the world of legal AI has been quietly moving ahead while many across the world hacked away.

To that end, legal AI pioneer LawGeex has just published a major report on an AI vs human lawyer challenge that it conducted recently, which shows just how accurate a well-trained NLP system can be compared to humans.

This is how the company explained their test: ‘In a landmark study, 20 experienced US-trained lawyers were pitted against the LawGeex Artificial Intelligence algorithm’.

‘The challenge focused on reviewing NDAs. The participants’ legal and contract expertise spanned experience at companies including Goldman Sachs and Cisco, and global law firms including Alston & Bird and K&L Gates. There was also a group of experts who advised on the test, and this included Dr. Roland Vogl, Executive Director of the Stanford Program in Law, Science, and Technology, and a Lecturer in Law at Stanford Law School.’

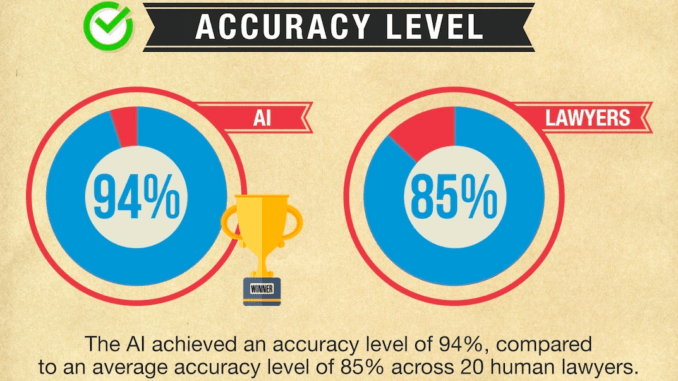

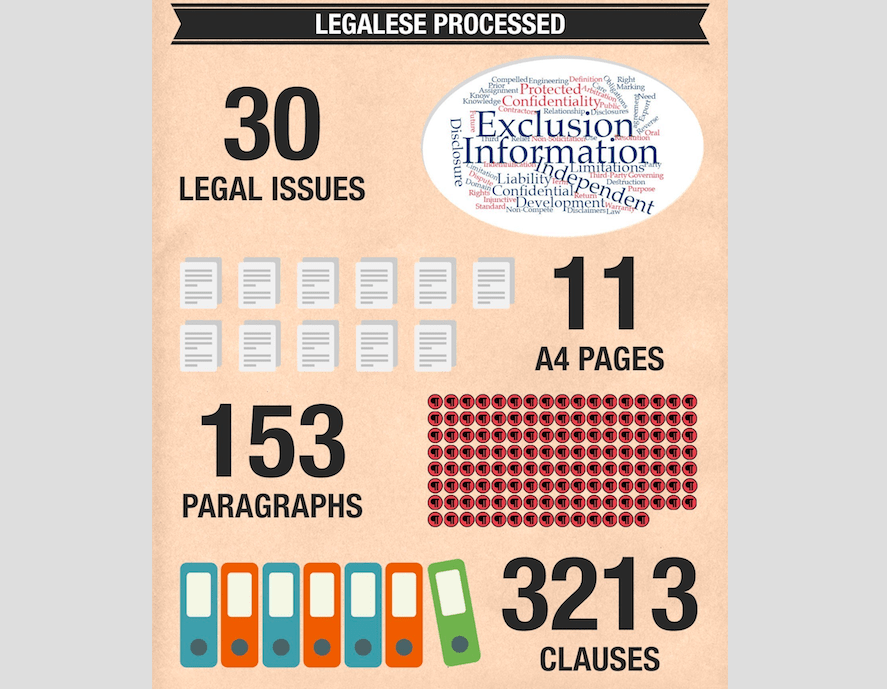

The study asked each lawyer to annotate five NDAs according to a set of Clause Definitions. Each lawyer was given four hours to find the relevant issues in all five NDAs.

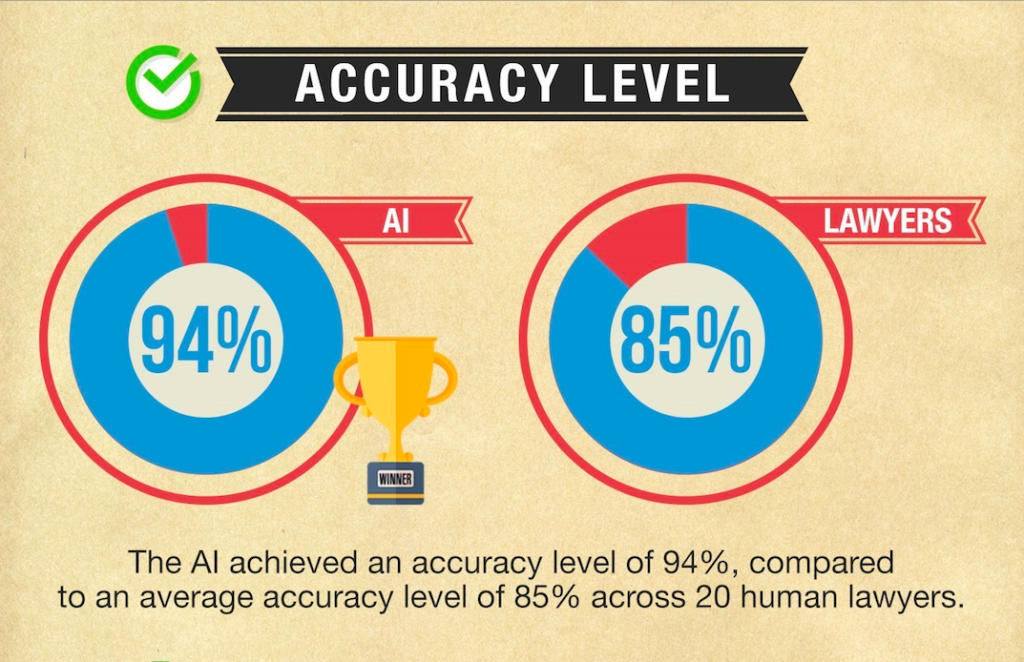

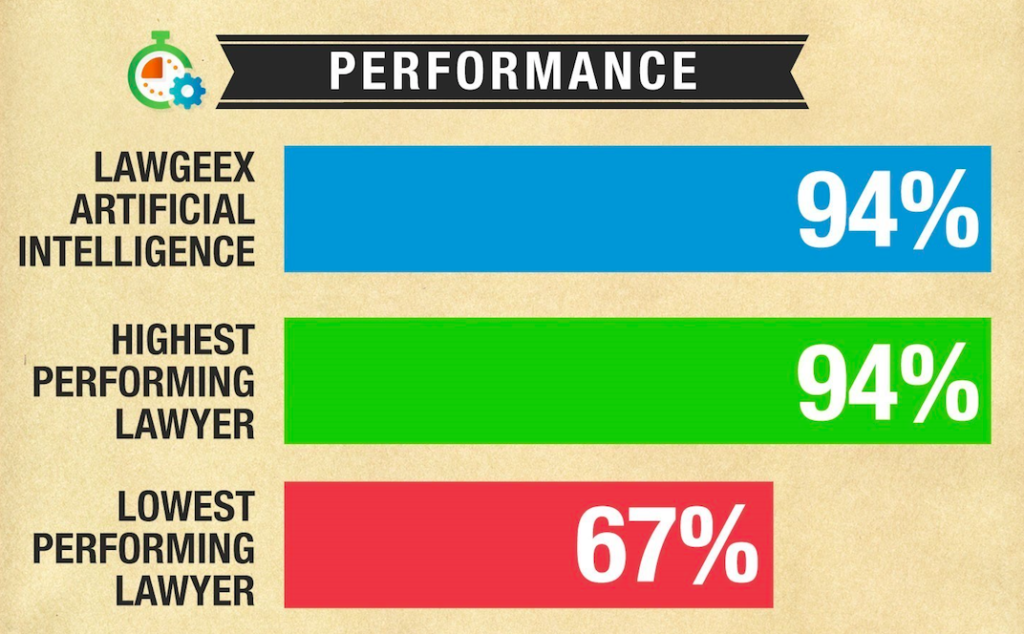

The contract review platform achieved a 94% accuracy rate at surfacing risks in the sample of never-before-seen Non-Disclosure Agreements (NDAs). This compares with an average of 85% for the experienced lawyers, said the company.

[N.B. Accuracy ratings in NLP involve some complex statistics, including issues such as ‘false positives’ and ‘false negatives’, as well as the concept of ‘recall’. The Wikipedia entries on these terms may help you to learn more. Also, see the notes at the end: ‘How Results Were Calculated’ in Appendix A.]

Gillian K. Hadfield, Professor of Law and Economics at the University of Southern California, who also advised on the test, said: ‘This experiment may actually understate the gain from AI in the legal profession. The lawyers who reviewed these documents were fully focused on the task: it didn’t sink to the bottom of a to-do list, it didn’t get rushed through while waiting for a plane or with one eye on the clock to get out the door to pick up the kids. The margin of efficiency is likely to be even greater than the results shown here.’

‘This research shows technology can help solve two problems: both making contract management faster and more reliable, and freeing up resources so legal departments can focus on building the quality of their human legal teams,’ she added.

The full research has now been published in a report: ‘Comparing the Performance of Artificial Intelligence to Human Lawyers in the Review of Standard Business Contracts’. You can download the report: here.

But for those eager to see the best graphics from the report, please enjoy the ones below:

—

Appendix A: HOW RESULTS WERE CALCULATED

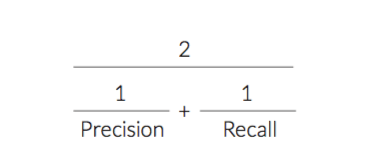

To mark the tests, consultant Christopher Ray measured the participants’ performance based on three metrics:

False Negative – an issue was missed

False Positive – an issue was misidentified

True Positive – an issue was accurately identified

These were then used to create three final metrics:

Recall: how many topics were accurately spotted in the right place out of the total number of topics possible to detect. As a standalone measurement, recall is insufficient as it allows one to achieve the maximum score through guesswork.

Precision: measures the number of correct answers made against the number of total answers given.

F-measure: the final accuracy score is the harmonic mean of Precision and Recall, calculated as follows:

F-measure = 2 × (Precision × Recall) / (Precision + Recall)
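For readers who want to see how the three counts turn into the three final metrics, here is a minimal Python sketch. It uses the standard textbook definitions of precision, recall and the harmonic-mean F-measure; it is not LawGeex's actual scoring code, and the function names and the example counts are hypothetical, chosen only for illustration.

```python
def precision(tp: int, fp: int) -> float:
    """Correct answers as a share of all answers given: TP / (TP + FP)."""
    given = tp + fp
    return tp / given if given else 0.0


def recall(tp: int, fn: int) -> float:
    """Issues spotted as a share of all issues present: TP / (TP + FN)."""
    present = tp + fn
    return tp / present if present else 0.0


def f_measure(tp: int, fp: int, fn: int) -> float:
    """Harmonic mean of precision and recall."""
    p = precision(tp, fp)
    r = recall(tp, fn)
    return 2 * p * r / (p + r) if (p + r) else 0.0


# Hypothetical reviewer A: careful, but misses two of ten issues.
careful = {"tp": 8, "fp": 1, "fn": 2}

# Hypothetical reviewer B: flags nearly everything, so nothing is missed
# (perfect recall) but most of the flags are wrong (poor precision).
guesser = {"tp": 10, "fp": 40, "fn": 0}

for name, counts in (("careful", careful), ("guesser", guesser)):
    print(
        name,
        f"precision={precision(counts['tp'], counts['fp']):.2f}",
        f"recall={recall(counts['tp'], counts['fn']):.2f}",
        f"f_measure={f_measure(**counts):.2f}",
    )
```

The second reviewer shows why recall alone is not enough: flagging everything scores a perfect recall, but the F-measure drops sharply because precision is so low.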

Following two months of testing, the LawGeex Artificial Intelligence achieved an average 94% accuracy rate, ahead of the lawyers who achieved an average rate of 85%.

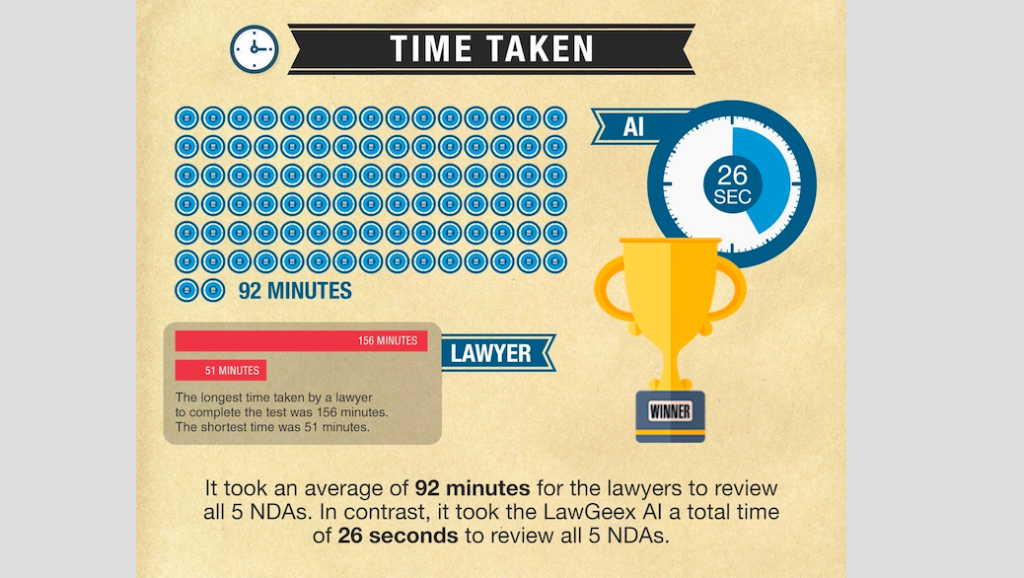

On average, it took 92 minutes for the lawyer participants to complete all five NDAs. The longest time taken by a lawyer to complete the test was 156 minutes, and the shortest time to complete the task by a lawyer was 51 minutes. In contrast, the AI engine completed the task of issue-spotting in 26 seconds.
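To put those timings side by side, the short Python sketch below converts the reported lawyer times into seconds and divides by the AI's 26 seconds. The figures come from the paragraph above; the variable and function names are hypothetical, and the ratio covers only time taken, not accuracy.

```python
AI_SECONDS = 26  # reported time for the AI engine

# Reported lawyer completion times, in minutes.
lawyer_minutes = {"fastest": 51, "average": 92, "slowest": 156}


def speed_ratio(minutes: float, ai_seconds: float = AI_SECONDS) -> float:
    """How many times longer a lawyer took than the AI engine."""
    return minutes * 60 / ai_seconds


for label, minutes in lawyer_minutes.items():
    print(f"{label} lawyer: about {speed_ratio(minutes):.0f} times the AI's time")
```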
